MapViewer

MAKE THE MOST OF YOUR SPATIAL DATA

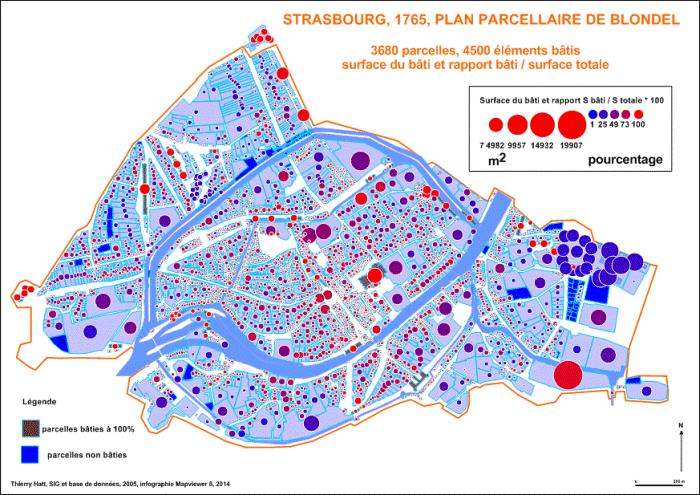

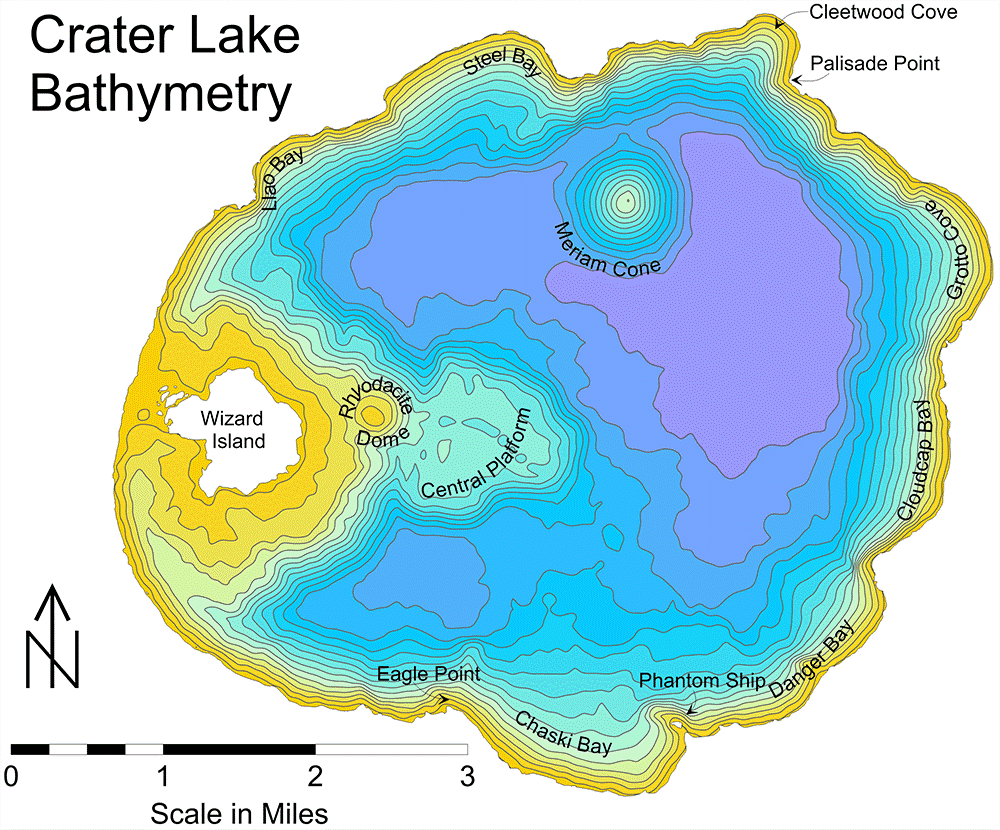

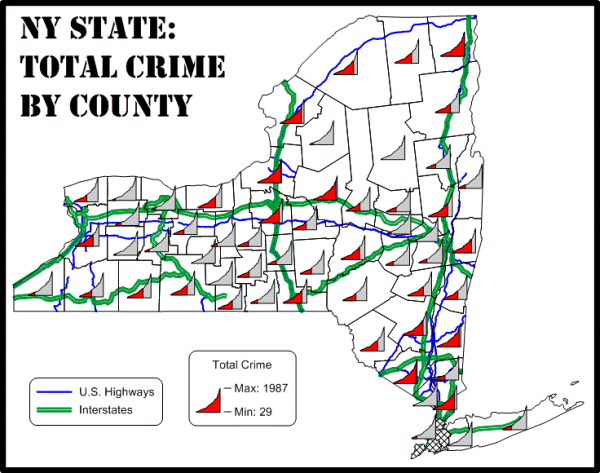

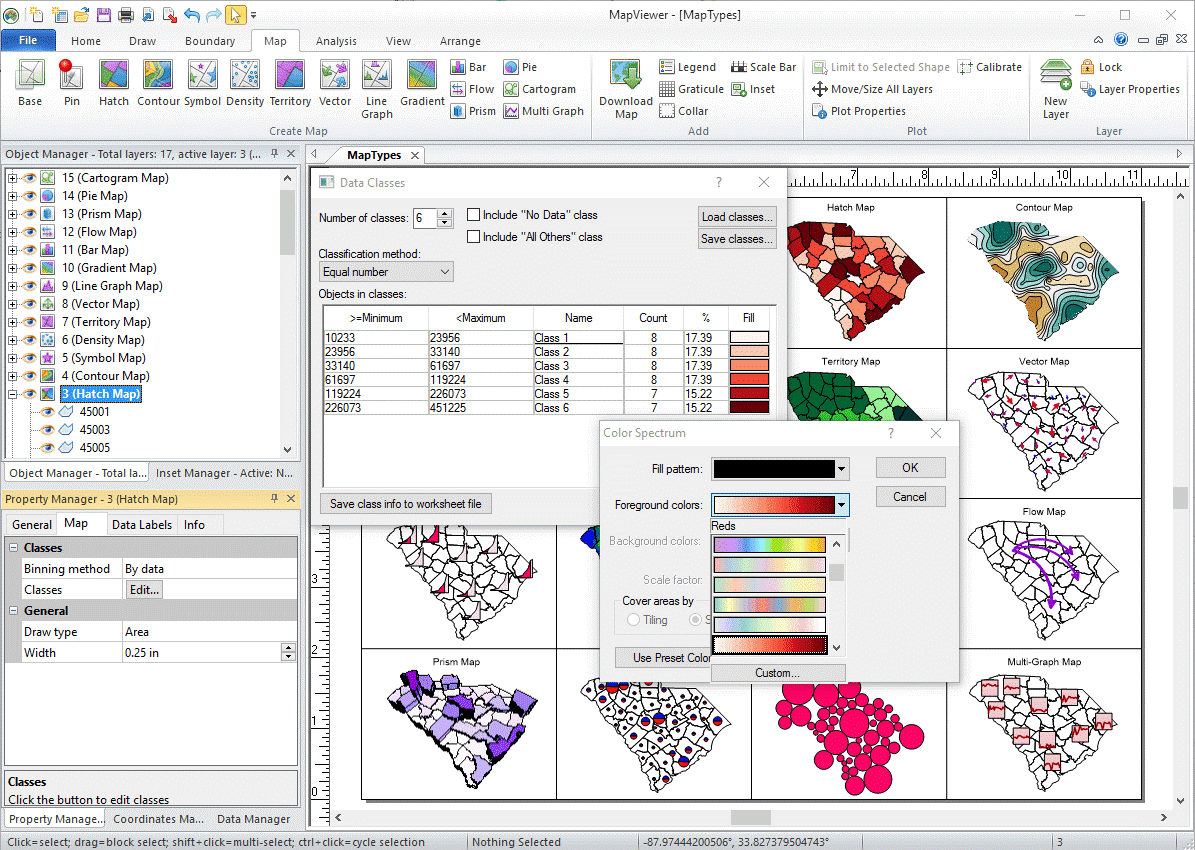

Create Professional Thematic Maps

Take control of your spatial data. MapViewer’s powerful mapping abilities transform spatial data into informative thematic maps. The flexible map display, instant customizations, and advanced analytics make MapViewer a go-to tool for GIS analysts, business professionals, and anyone dealing with spatially distributed data.

MapViewer Map Types

- Base

- Pin

- Choropleth

- Contour

- Symbol

- Density

- Territory

- Vector

- Line Graph

- Gradient

- Bar

- Flow

- Prism

- Pie

- Cartogram

- Multi-graph

Enhance Maps

Create maps that are as beautiful as they are intelligent. MapViewer’s extensive customization options let you create maps to clearly communicate your message.

MapViewer Customization Options

- Add titles, legends, scale bars, graticules, map collars, and insets

- Apply linear color scales

- Edit all axis parameters

- Define custom line styles and colors

- Add text, polylines, polygons, symbols, and spline polylines

- Edit text, line, fill, and symbol properties

- Include multiple maps in a display

- Adjust tilt and rotation

Make Informed Decisions

MapViewer Boundary Editing Tools

- Reshape, clip, and smooth polylines and polygons

- Create buffers around points, polylines, and polygons

- Convert between polygons and polylines

- Create new polygons by combining existing polygons that overlap or share a border

- Create points or polygons at the intersections of overlapping objects

- Connect or break polylines at specified locations

- Combine and split islands

MapViewer Analysis Tools

- Query map data and attributes

- Generate various map and object data reports

- Evaluate spatial relationships and distances

- Perform geocoding

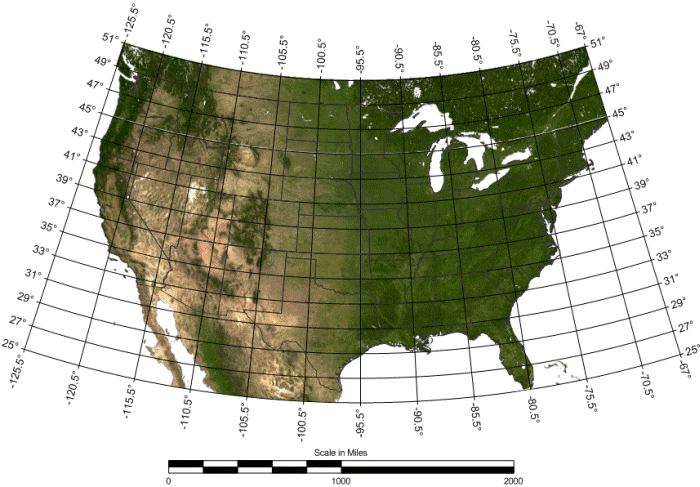

Work Seamlessly With All Coordinate Systems

MapViewer makes it easy to transform spatial data into informative maps. MapViewer effortlessly manages unreferenced data and data projected in different or multiple coordinate systems.

MapViewer Coordinate System Features

- Over 2500 predefined coordinate systems

- Create custom coordinate systems

- Search coordinate systems by name or EPSG number

- Reproject coordinate systems

- Over 80 ellipsoids

- Over 45 predefined linear units

- Create custom linear units

- Use predefined or custom datums
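To illustrate what reprojection involves, here is a minimal sketch that converts WGS 84 longitude/latitude (EPSG:4326) to Web Mercator (EPSG:3857) using the standard spherical formula. It is not MapViewer's implementation; the function name is hypothetical and chosen only for this example.

```python
import math

# WGS 84 semi-major axis in meters, used as the sphere radius by Web Mercator.
EARTH_RADIUS_M = 6378137.0


def wgs84_to_web_mercator(lon_deg, lat_deg):
    """Convert lon/lat in degrees (EPSG:4326) to x/y in meters (EPSG:3857).

    Hypothetical helper for illustration. Latitudes must lie strictly
    between -90 and 90 degrees; Web Mercator is normally limited to
    about +/-85.05 degrees.
    """
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(
        math.tan(math.pi / 4 + math.radians(lat_deg) / 2)
    )
    return x, y


if __name__ == "__main__":
    # Example: an arbitrary point in the western United States.
    print(wgs84_to_web_mercator(-105.0, 39.75))
```

A full coordinate system library also handles datum shifts and ellipsoidal projections, which this sketch deliberately leaves out.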

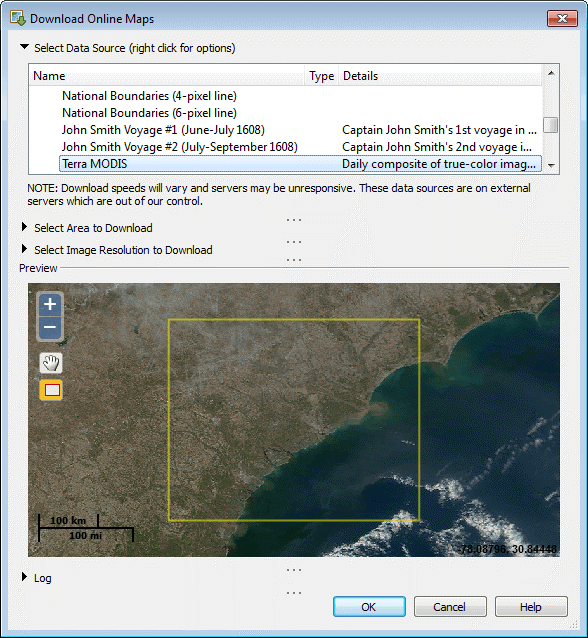

Immediate Access To Online Data

An abundance of data is at your fingertips waiting to be visualized. MapViewer gives you immediate access to maps downloaded from any online Web Map Service (WMS) server, public or private.
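For readers new to WMS, a map is requested through an HTTP URL with a standard set of query parameters. The sketch below builds a WMS 1.3.0 GetMap URL; the server address and layer name are hypothetical placeholders, and the code shows the protocol only, not how MapViewer performs its downloads.

```python
from urllib.parse import urlencode


def build_getmap_url(base_url, layer, bbox, width, height,
                     crs="EPSG:3857", image_format="image/png"):
    """Build a WMS 1.3.0 GetMap request URL.

    bbox is (minx, miny, maxx, maxy) in the units of `crs`. Note that
    some coordinate systems, such as EPSG:4326, use latitude/longitude
    axis order in WMS 1.3.0.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value requests the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": image_format,
    }
    return base_url + "?" + urlencode(params)


if __name__ == "__main__":
    # Hypothetical server and layer, for illustration only.
    print(build_getmap_url(
        "https://example.com/wms", "roads",
        (-20037508.34, -20037508.34, 20037508.34, 20037508.34),
        512, 512,
    ))
```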

Complete Compatibility

Seamlessly visualize and analyze data from multiple sources. MapViewer natively reads numerous file formats including SHP, DXF, and XLSX. MapViewer also supports many popular export formats.

Collaborate With Confidence

Share your work with clients, colleagues, and stakeholders. Maps are ready for printed publication with high-quality export formats such as PDF or TIFF. Alternatively, share your work online with web-compatible formats like JPG or PNG. If you are preparing for a presentation, simply copy and paste your map into tools such as Microsoft PowerPoint or Word.

Streamlined Workflows

MapViewer’s intuitive user interface allows you to go from raw data to informative map in minutes.

MapViewer User Interface Features

- Single window to view, edit, and manipulate the data and maps

- Object manager to easily manage map layers and objects

- Property manager for quick feature editing

- Worksheet window to view or edit raw data

- Dock or float all managers

- Customize ribbon bar tabs

- Welcome dialog to get you started

- Customize practically all components of the user interface to fit your needs

Work Smart, Not Hard with Automation

Don’t waste time doing the same process over and over. Create scripts to automate repetitive or recurring tasks. MapViewer can be called from any automation-compatible programming language, such as C++, Python, or Perl. MapViewer also ships with Scripter, a built-in Visual Basic-compatible scripting tool.
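As a rough sketch of what calling MapViewer from Python might look like, the example below starts the application through Windows automation (COM). It assumes a Windows machine with MapViewer and the third-party pywin32 package installed. The ProgID `MapViewer.Application` and the `Visible` property are assumptions to verify against the MapViewer automation help, and the function name is hypothetical.

```python
def launch_mapviewer(dispatch=None, prog_id="MapViewer.Application"):
    """Start MapViewer through COM automation and show its window.

    `prog_id` and the `Visible` property are assumptions; confirm them
    in the MapViewer automation documentation. `dispatch` can be
    replaced with a stand-in for testing without MapViewer installed.
    """
    if dispatch is None:
        # Imported lazily because pywin32 exists only on Windows.
        from win32com.client import Dispatch as dispatch
    app = dispatch(prog_id)
    app.Visible = True  # assumed property: make the window visible
    return app
```

Calling `launch_mapviewer()` with no arguments would use the real COM dispatcher. Passing a stand-in for `dispatch` lets the surrounding script logic be exercised on machines without MapViewer.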

Save time with automation, and save even more time by reviewing the extensive set of sample scripts in the MapViewer Automation Knowledge Base!